MCP Part I - Core Concepts, Past, Present and Future of Agentic Systems

This exploration of the Model Context Protocol (MCP) is presented in three distinct parts:

- Part I, the current article, introduces the foundational concepts of MCP.

- Part II will delve into a specific implementation, demonstrating a custom host leveraging Google’s VertexAI API and the Gemini model.

- Part III will showcase a practical custom server implementation tailored for a particular use case.

The parts are linked, but loosely coupled: you can read them separately.

About Agentic Systems

Before starting, let’s establish some ubiquitous language and clarifications that will be used throughout this series of articles:

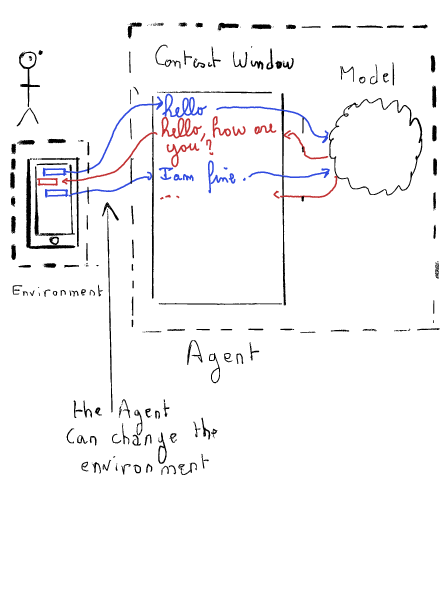

An agent is a general concept. It is an entity that can interact with an environment. An environment provides information that makes it observable by the agent. It also provides capabilities that allow the agent to interact with it. By interacting with the environment, the agent changes it. These changes are what make the agent useful (an agent that cannot change any environment is useless).
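To make the vocabulary concrete, here is a minimal sketch of the observe/act relationship described above. Everything in it is an assumption made for illustration: `CartEnvironment`, `observe`, `act`, and `simple_agent` are hypothetical names, and the decision rule is hardcoded for brevity, whereas in a real agent a model would choose the action.

```python
from dataclasses import dataclass, field


@dataclass
class CartEnvironment:
    """A toy environment: a shopping cart (hypothetical example)."""

    items: list[str] = field(default_factory=list)

    def observe(self) -> dict:
        # Information the environment makes observable to the agent.
        return {"items": list(self.items), "count": len(self.items)}

    def act(self, action: str, item: str) -> None:
        # Capabilities the environment offers; each one changes its state.
        if action == "add":
            self.items.append(item)
        elif action == "remove":
            self.items.remove(item)  # raises ValueError if the item is absent
        else:
            raise ValueError(f"unsupported action: {action!r}")


def simple_agent(env: CartEnvironment, wanted: list[str]) -> None:
    """Observe the environment, then act on it until the goal is reached."""
    for item in wanted:
        if item not in env.observe()["items"]:
            env.act("add", item)


env = CartEnvironment(items=["tea"])
simple_agent(env, ["tea", "mug"])
print(env.observe())  # {'items': ['tea', 'mug'], 'count': 2}
```

The agent never touches `items` directly: it only goes through what the environment chooses to expose, which is the point of the definition.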

When talking about agents, we also often talk about autonomy. Autonomy is a variable property of an agent: its capacity to act on its own. There can be various levels of autonomy. The more autonomous the agent is, the less human interaction it requires to act.

Note: I won’t go into details here, but do not confuse agents and workflows. A workflow is a pre-programmed execution process. It is an automated representation of a human process. See it as an execution graph. Even if one node of that graph embeds cognitive automation, it is not to be confused with the concept of agency we are using here.

About environments

Even if the current AI (r)evolution is about the reasoning process, let’s consider the most crucial aspect that will make these AIs useful to humankind: environments.

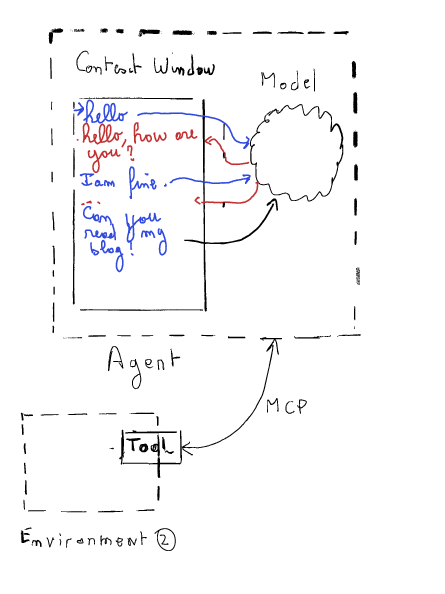

To be pragmatic, I will illustrate this part with the most used environment nowadays: a chat window. In a chatbot (such as Claude, Gemini, or ChatGPT), the model is an LLM (Large Language Model), and it generates textual information (it is generative AI). Its goal is to continue a prompt and fill a context window.

Note: While the chat window is a common example, an environment can also be a database, an API, a physical system (through sensors and actuators), or any other system that provides information and actions.

The context window is like a notebook (of limited size) in which the conversation is stored. The chat interface is a type of user interface, a representation of the environment. You can write in the chat window… and the agent can also write in this window.

Note: This is oversimplified for the sake of explanation. The context window could be considered part of the environment, but I keep it separate to simplify the explanation that will come in Part II of this series (where we will dig into the agent).

The environment is closed.

As humans, we are also agents. And we interact with many environments.

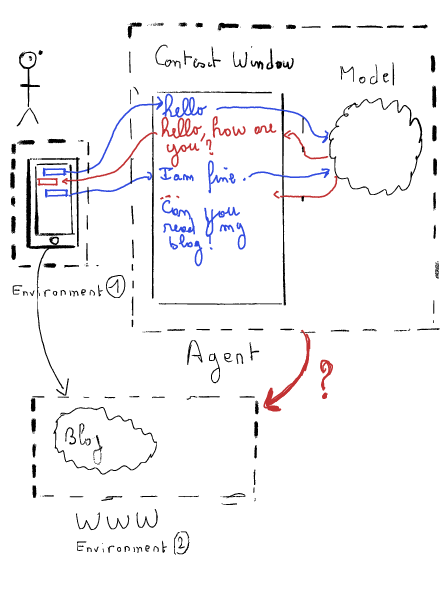

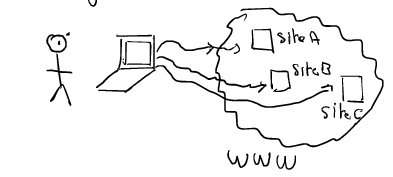

For example, the World Wide Web (WWW) can be seen as an environment, and the browser is the tool that allows us to interact with it. By clicking on a link, we change what is presented. Moreover, in the digital world, we are exposed to multiple capabilities that allow us to interact with the system—e.g., adding an item to a shopping cart or booking a hotel.

So, to make the agent even more useful, we should give it the ability to interact with a wider environment than the chat window.

As humans, we could then delegate cognitive tasks to agents and use them as proper assistants. That would free us from toil (remember, we are talking about agents, not workflows).

The problem, therefore, is: how do we make the agent interact with the environment… any environment?

The solution to the problem

Giving an agent the ability to interact with a digital environment is not straightforward. Actually, the model can understand an intention; it can more or less reason, but most of the time, the only environment it can interact with is the chat window.

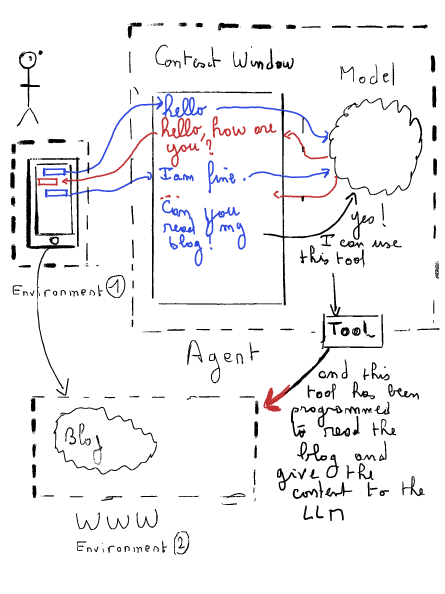

Therefore, we need to provide new capabilities that it can trigger as required.

These capabilities are presented as digital tools and commonly exposed as functions (other interfaces are possible, though).

Each tool is then specialized to perform a certain task.

How can the agent use the tool?

Now the question is: how can the agent use the tool? And how does it choose the right tool if we provide many of them? Keep in mind that the interaction with the model is, as of today, always through natural language.

Therefore, we need to set up some glue (a mechanism that translates the agent’s natural language request into a structured call to the tool) to give the agent the ability to run the tool.

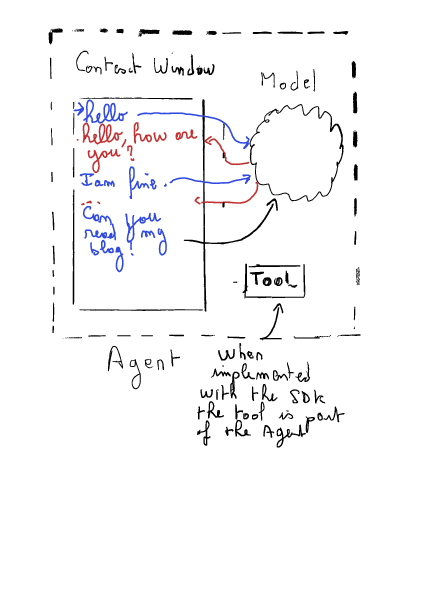

Most generative model execution engines come with a Software Development Kit (SDK) that lets you interact with the model and extend its capabilities under the hood. (In the second part of this series, I will explain these concepts by implementing an agent based on Google’s VertexAI services.)

But this system is tightly coupled. It requires heavy modification of the agent’s code, and the tool ends up hardcoded within the agent.
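Here is a minimal sketch of what that coupling looks like, under loud assumptions: `fake_model` is a stand-in for an LLM (no real SDK is called), `get_weather` returns a canned answer, and the JSON shape of the request is invented for the example. It only illustrates the glue that turns the model's text into a structured call.

```python
import json


def get_weather(location: str) -> str:
    # Hypothetical tool with a canned answer, so the sketch runs offline.
    return f"Sunny in {location}"


# The tools are hardcoded inside the agent: adding one means editing this code.
TOOLS = {"get_weather": get_weather}


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM that answers with a structured tool request."""
    return json.dumps({"tool": "get_weather", "arguments": {"location": "Paris"}})


def run_agent(prompt: str) -> str:
    request = json.loads(fake_model(prompt))  # glue: text -> structured call
    tool = TOOLS[request["tool"]]             # pick the tool the model named
    return tool(**request["arguments"])       # execute it with its arguments


print(run_agent("What is the weather in Paris?"))  # Sunny in Paris
```

Note that `TOOLS` lives in the same program as the agent loop. Whoever owns the weather service cannot ship a new tool without touching the agent.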

Separating the tool would provide a huge set of benefits:

- The tool has nothing to do with AI (modeling, science, and so on). It is pure software engineering. Therefore, it can live its own lifecycle independently of the agent (it could be used by any model or even by a human).

- The tool can move closer to the environment. It can act as an entry point to the environment. The ownership is close to the business. The business is in charge of exposing the resources of the environment and the actions it wants to provide to the agents.

The Web could, for example, be turned into an ecosystem of tools that could be used by any agent to perform a task.

Model Context Protocol as a proposed standard

To create this ecosystem of tools that allows interaction with as many environments as possible, there is a need for a standard: a proper way for the agent and the tools to communicate.

Think of it like the World Wide Web – without HTTP/HTML, the web wouldn’t work as it does today. This standard needs to define how tools are described and how agents can interact with them.

I expose a function {{function_name}} that does {{description}} and that requires these arguments:

- {{argument_name}} is a {{type}} that represents {{description}}

For example, a tool might be described using a structured format like JSON. Here’s a simplified example of how a weather tool could be represented:

    {
      "function_name": "get_weather",
      "description": "Retrieves the current weather for a given location.",
      "arguments": [
        {
          "name": "location",
          "type": "string",
          "description": "The city or region for which to retrieve weather data."
        }
      ]
    }

You get the drill. This description is then serialized into a machine-readable format that can be transferred across a network, which makes it accessible over the Internet.

This is, in essence, what MCP is about: a standard of exposition and communication.
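The describe-then-serialize idea can be sketched in a few lines. This is an illustration under assumptions, not how MCP does it: the field names (`function_name`, `description`, `arguments`) follow the simplified format used in this article rather than the actual MCP schema, and `describe` and `TYPE_NAMES` are hypothetical helpers.

```python
import inspect
import json


def get_weather(location: str) -> str:
    """Retrieves the current weather for a given location."""
    return f"Sunny in {location}"  # canned answer for the sketch


# Map Python annotations to the type names used in the description.
TYPE_NAMES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe(func) -> dict:
    """Build a description in the simplified format used in this article."""
    signature = inspect.signature(func)
    return {
        "function_name": func.__name__,
        "description": inspect.getdoc(func),
        "arguments": [
            # Unannotated or unknown types fall back to "string".
            {"name": name, "type": TYPE_NAMES.get(param.annotation, "string")}
            for name, param in signature.parameters.items()
        ],
    }


payload = json.dumps(describe(get_weather))  # serialized, ready to be sent
print(payload)
```

The interesting part is that the description is derived from the tool itself: the owner of the function publishes it, and any agent that understands the format can discover how to call it.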

MCP in detail

So, MCP is a standard to enhance the agent and give it tools that it will be able to use out of the box.

Ubiquitous language of MCP

MCP comes with its own vocabulary. I will introduce some of its concepts here:

- The tools we have been talking about are exposed by MCP Servers.

- The application running the LLM is a host.

- The host communicates with the MCP Server by implementing an MCP Client.
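The three roles can be sketched as three small classes. This is a toy, in-memory illustration of who talks to whom: `ToyServer`, `ToyClient`, and `ToyHost` are hypothetical names, the message shape is invented, and nothing here is the API of an MCP SDK or the MCP wire format.

```python
class ToyServer:
    """Plays the MCP Server role: owns the tool, close to the environment."""

    def handle(self, request: dict) -> dict:
        if request["tool"] == "get_weather":
            return {"result": f"Sunny in {request['arguments']['location']}"}
        return {"error": f"unknown tool: {request['tool']}"}


class ToyClient:
    """Plays the MCP Client role: the host's connector to one server."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server  # a real client would hold a connection instead

    def call_tool(self, name: str, arguments: dict) -> dict:
        return self.server.handle({"tool": name, "arguments": arguments})


class ToyHost:
    """Plays the host role: runs the model and owns the client."""

    def __init__(self, client: ToyClient) -> None:
        self.client = client

    def answer(self, location: str) -> str:
        # A real host would let the model decide when to call the tool.
        reply = self.client.call_tool("get_weather", {"location": location})
        return reply["result"]


host = ToyHost(ToyClient(ToyServer()))
print(host.answer("Paris"))  # Sunny in Paris
```

Compared with a tool hardcoded in the agent, the host only knows how to speak to a client, so the server can evolve or be replaced without changing the host.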

What an MCP server exposes

MCP is more than a vocabulary. The protocol defines three standard types of capabilities that a server can provide:

- Resources: a server can expose resources of an environment (“a list of available products,” “the current stock price,” or “a user’s profile”).

- Tools: a server can provide functions to perform certain specific tasks (“calculate the distance between two points,” “send an email,” or “book a flight”).

- Prompts: a server can provide pre-written templates. It can feed the host with more knowledge to help it forge its reasoning (“a template for writing a product description,” “a set of rules for formatting a report,” or “a knowledge base about a specific topic”).
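The three capability types above can be sketched with a toy, in-memory server. The method strings (`resources/read`, `tools/call`, `prompts/get`) mirror names used in the MCP specification, but everything else is an assumption made for illustration: `ToyMcpServer` is hypothetical, the data is made up, and the parameters and return values are heavily simplified and do not follow the real schema.

```python
class ToyMcpServer:
    """In-memory stand-in for a server exposing the three capability types."""

    def __init__(self) -> None:
        # Resources: data the environment exposes (made-up catalog).
        self.resources = {"catalog://products": ["tea", "mug"]}
        # Tools: functions that perform a specific task.
        self.tools = {"distance": lambda a, b: abs(a - b)}
        # Prompts: pre-written templates the host can reuse.
        self.prompts = {"product_description": "Write a product description for {product}."}

    def handle(self, method: str, params: dict):
        if method == "resources/read":
            return self.resources[params["uri"]]
        if method == "tools/call":
            return self.tools[params["name"]](**params["arguments"])
        if method == "prompts/get":
            return self.prompts[params["name"]].format(**params["arguments"])
        raise ValueError(f"unsupported method: {method}")


server = ToyMcpServer()
print(server.handle("resources/read", {"uri": "catalog://products"}))
print(server.handle("tools/call", {"name": "distance", "arguments": {"a": 3, "b": 10}}))
print(server.handle("prompts/get", {"name": "product_description", "arguments": {"product": "mug"}}))
```

The split matters for the host: resources are read, tools are executed, and prompts are filled in and handed to the model, so each type can be governed differently.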

This standard makes it easy to extend the ability of an agent.

I won’t go into the implementation details of MCP here (that will be the topic of Part III of this series, where we will talk about Remote Procedure Calls, JSON, and other IT topics). I will close this article with a set of convictions I have.

Conclusions and convictions

This standard makes it easy to extend the abilities of any agent. It changes the business paradigm and is, in my view, the enabler of the next digital revolution.

The first revolution was the Internet. The Internet brought omnichannel. A business could expose its services, and users could interact with it from their couch.

The second revolution was the smartphone, which brought true digital services (accessible with digits) and nomadism: services from everywhere. But it was still the responsibility of the user to do the cognitive routing between one service and another (shall I book the train first, or the hotel… is it compatible with my agenda…).

The next revolution is that you won’t do those cognitive tasks by yourself. You will delegate them to an assistant.

But which assistant will earn your favor? The assistant that gets the most favor will win a business war: it will hold millions or even billions of potential users that it can route to a business depending on the will of the model. This new paradigm could lead to significant shifts in how businesses provide services and how users interact with them. The competition to create the most useful and trusted AI assistants will likely be fierce: Which host will be the most used? Which data will it collect to refine its choice of the best tool… the one from your favorite company… or the one provided by the company that pays the most?

Next:

- Part II will dive into a practical implementation of an MCP host, demonstrating how to connect to Google’s VertexAI API and use the Gemini model. You’ll see how to set up the agent and integrate it with external tools.

- Part III will focus on building a custom MCP server for a cybersecurity use case. We’ll explore the technical details of setting up the server, exposing resources, and implementing the communication protocol.